adamgdunn

-

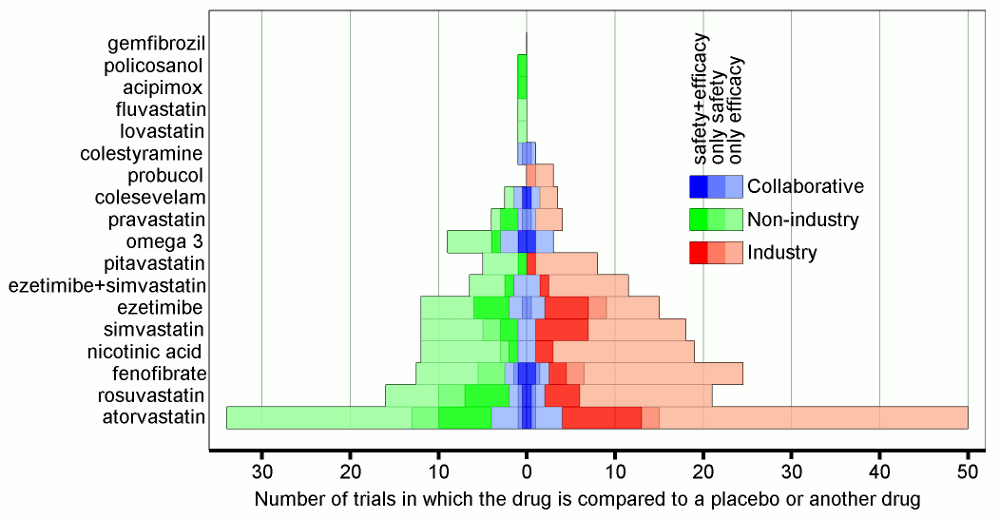

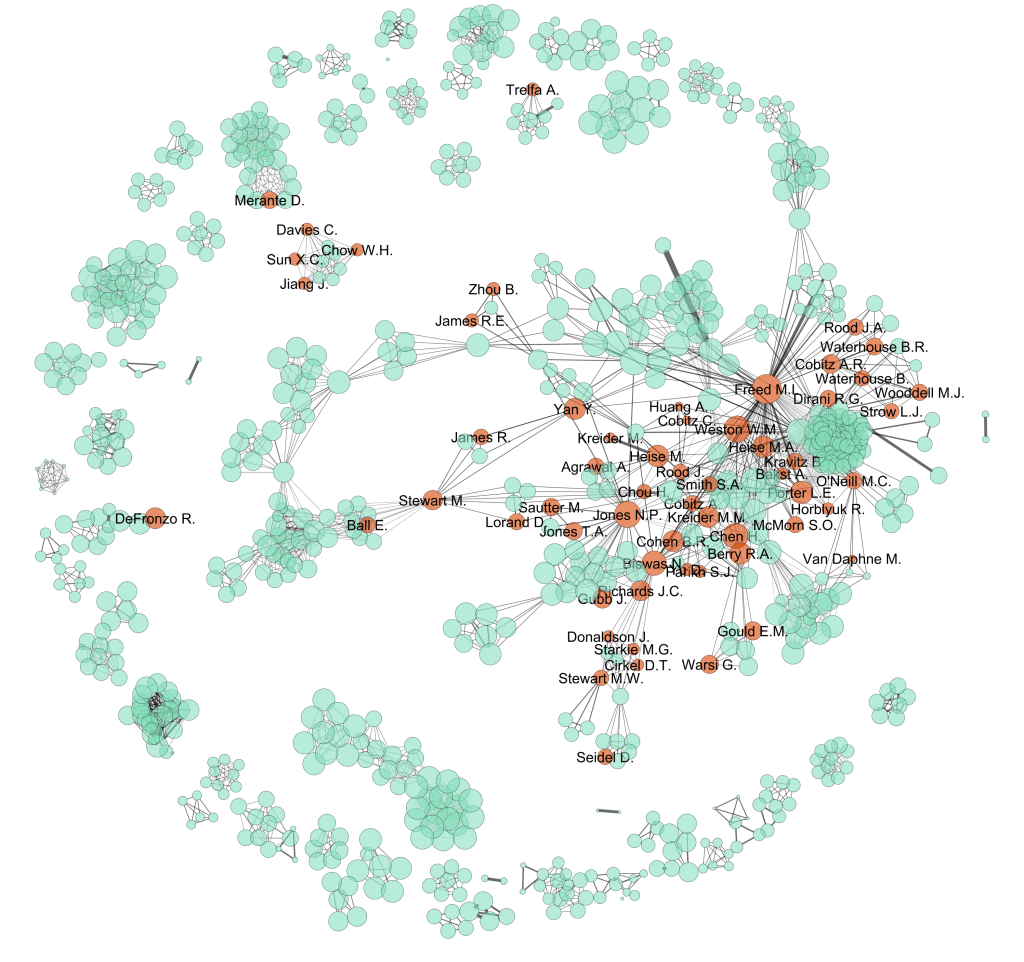

Who creates the clinical evidence for cholesterol-lowering drugs?

Last week the US Food and Drug Administration released new warnings about the use of statins for patients in the United States. The warnings, which have been added to US labels, stem from concerns about liver injury, memory loss and confusion, increased blood sugar levels, and some new, potentially dangerous interactions between one statin…

-

Do pharmaceutical companies have too much influence over the evidence base?

Imagine you are a doctor with a patient sitting in your office. You have already diagnosed the patient with a condition, and treatment will definitely involve prescribing one or more drugs. And, because the condition is quite common, there are several government-subsidised drugs from which you…