networks

-

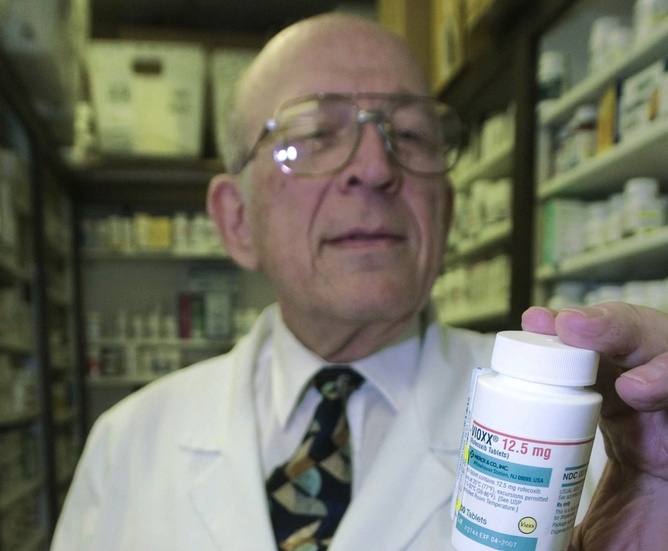

Who creates the clinical evidence for cholesterol-lowering drugs?

Last week the US Food and Drug Administration released new warnings about the use of statins in the United States. The warnings, which have been added to US labels, stem from concerns about liver injury, memory loss and confusion, increased blood sugar levels, and some new potentially dangerous interactions between one statin…

-

Spatial ecological networks – where physics, ecology, geography and computational science meet

It’s part physics, part ecology, and part geography – and that’s probably why it is so much fun. Whenever I fly from city to city, my favourite part of the trip is looking out of the window at the patterns in the landscape. Most of the time, the patterns are carved out by…

-

Dinner for NetSci2011 at Sofitel Budapest

Pictured below is Robin Dunbar (Oxford) making jokes about monogamy, watched by Albert-László Barabási (Harvard, Northeastern), Uri Alon (Weizmann Institute), Alain Barrat (Centre national de la recherche scientifique) and Andrea Baronchelli (UPC Barcelona). Not pictured, but still in the room, are other well-known luminaries such as Brian Uzzi (Kellogg School of Management), and Hawoong…

-

Spreading, Influencing and Cascading

The NetSci2011 satellite workshop on spreading, influencing and cascading in social and information networks. Here is Brian Uzzi, whose discussion of how scientific ideas are adopted provided some good laughs and set some brains ticking over how they might raise their own citation rates. If only it were as simple as…

-

NetSci2011 is being held at the Central European University. The first day includes a school and workshops. I’ll be attending Circuits of Profit, which promises to be a practical look at network analysis in business applications. Hopefully we’ll see lots of good science and not too many palm readers. I’m looking forward…